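In issue 18 of the 2025 World Scholar's Cup WSC Weekly column, we explored the history of electric vehicles with our young scholars. Did you find the right answer to last issue's fun quiz? Let's reveal it now!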

You think electric cars are the latest trend,

but were they actually everywhere more than a hundred years ago?

The rise, fall and resurgence of electric vehicles in the 20th century

Issue 18 Quiz answer:

Which of the following is NOT a factor that explains the fall of electric vehicles in the 20th century?

A. Lack of charging infrastructure

B. Invention of the electric starter

C. Oil boom

D. Low travel range of electric cars

E. Increase in electricity price

Key: E

2025, Issue 19

Weekly Intro

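As AI tools such as ChatGPT, DeepSeek, and Kimi work their way ever deeper into our work and daily lives, humanity faces a quiet cognitive crisis. While we enjoy the convenience of technology, are we being assimilated by algorithms? This issue of Weekly takes a close look at the phenomenon of “model collapse”: from the trap of repetitive AI output to the undercurrent of ethical risk, this reflection on creativity may be the turning point at which humans reclaim sovereignty over their own thinking.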

2025 No.19

Why does AI feel dumber these days?

Is artificial intelligence becoming dumber?

AI with a “falling IQ”

Do you ever get the feeling that as AI tools become more and more prevalent in our lives, they are not as smart as they used to be? At first, artificial intelligence felt magical. Tools like ChatGPT could summarize articles, answer questions, even write poetry or solve math problems. But lately, something feels off. More and more users report that these models seem dumber: more repetitive, less helpful, and more prone to hallucinations or generic answers. This isn’t just user imagination or nostalgia for earlier versions. Researchers are sounding the alarm about a phenomenon called “model collapse”: a subtle but potentially serious breakdown that happens when AI models begin training on data produced not by humans, but by other AIs.

What AI models are raised on

In the early days of AI training, language models and image generators were fed massive amounts of human-created data: books, news articles, websites, paintings, photographs. This rich and varied content helped them learn grammar, logic, tone, and how people think and speak. But the supply of high-quality human data isn’t endless. As copyright protections tighten and the public becomes more concerned about data usage, privacy, and consent, AI developers are turning more and more to synthetic data, meaning material generated by previous AI models, to train new ones. At first, this might seem harmless. If an AI model creates high-quality content, why not reuse it to train a newer, better model? The problem is that AI-generated content, while often impressive on the surface, contains invisible weaknesses. It lacks the depth, unpredictability, and subtle errors of human thinking. It also reflects the limitations of the models that produced it. When AIs are trained on synthetic data, they don’t just learn the surface form. They absorb the flaws, biases, and shortcuts embedded in that data. Over time, these small errors compound.

The theory of model collapse

这正是研究人员所说的“模型坍塌”。在牛津大学计算机科学家 Ilia Shumailov 发表的一项研究中,他们发现当 AI 一次又一次地使用合成数据进行训练,模型的输出表现会变得越来越狭隘、不准确、甚至失真。你可以把这比作反复复印复印件:每复印一次,图像就更模糊,最后只剩下一团糊状物。早期几代 AI 还能产出有用的结果,但如果只是用人工内容的模仿品进行训练,那么每一轮新的训练都会让模型失去一部分细节和真实感。最终,AI 会“忘记”如何真实地反映世界。

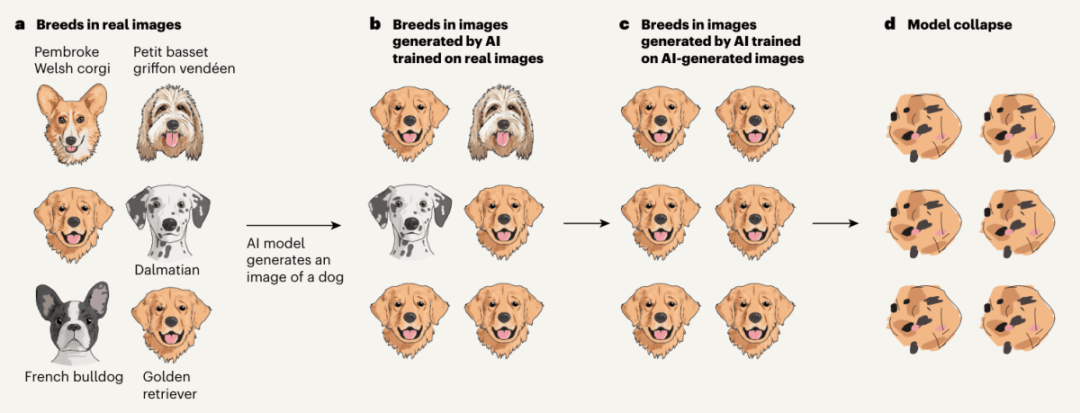

一个例子来自图像生成领域。在一项研究中,一个原本接受了各种犬类品种图像训练的AI模型,后续使用另一个AI模型生成的犬类图像再次训练。结果,这个模型开始偏爱输出金毛猎犬图像,因为金毛的图像在合成数据中更常见。最后,它几乎不再生成稀有品种狗的图像。语言模型也是如此:罕见词汇、方言、冷门观点甚至真实事件,如果不能持续地被人类创作的材料所代表,这些元素都会逐渐从 AI 的输出中消失。

This is what researchers call model collapse.In a paper by Ilia Shumailov and colleagues, scientists found that when AIs are trained repeatedly on synthetic data, their performance gets narrower, less accurate, and more distorted. Think of it like photocopying a photocopy over and over—the clarity fades, and eventually you’re left with a blurry mess. Early generations of AI may still produce useful outputs, but each new round trained on synthetic content loses some nuance. Over enough cycles, the AI starts to “forget” how to reflect the real world at all. One example is image generation. In one study, a model originally trained on images of many dog breeds was then re-trained using images generated by another model. The result? It began to favor golden retrievers—because those were more common in the synthetic dataset. Eventually, it stopped producing less common breeds altogether.The same principle applies to language: rare vocabulary, dialects, ideas, and even factual events may slowly vanish from an AI’s outputs if they aren’t consistently represented in fresh, human-authored material.
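The dog-breed example can be mimicked with a toy simulation. The sketch below is only an illustration under simple assumptions, not the method used in the study: the “model” is nothing more than a table of breed frequencies, each new generation is “trained” only on samples drawn from the previous generation, and the function name, breed names, and counts are all hypothetical.

```python
import random
from collections import Counter


def retrain_on_own_output(breed_counts, generations, sample_size, seed=0):
    """Toy model-collapse loop (illustrative assumption, not a real training run).

    Each generation "generates" a synthetic dataset by sampling breeds in
    proportion to the current counts, then "retrains" by counting that sample.
    Returns a list of Counters, one per generation, starting with the original.
    """
    rng = random.Random(seed)
    current = Counter(breed_counts)
    history = [current]
    for _ in range(generations):
        breeds = list(current.keys())
        weights = [current[b] for b in breeds]
        # Synthetic data: drawn only from what the previous generation produced.
        samples = rng.choices(breeds, weights=weights, k=sample_size)
        # A breed that was never sampled is absent from the new counts,
        # so it can never be generated again.
        current = Counter(samples)
        history.append(current)
    return history


if __name__ == "__main__":
    # Hypothetical starting mix: one common breed and several rarer ones.
    start = {"golden retriever": 60, "poodle": 25, "basenji": 10, "otterhound": 5}
    for generation, counts in enumerate(retrain_on_own_output(start, 30, 100)):
        if generation % 10 == 0:
            print(generation, dict(counts))
```

Because a breed that drops out of one generation's sample has no way back in, the number of distinct breeds can only stay the same or shrink. Mixing fresh human-made data into each round would be one way to break that one-way loss in this toy setting.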

The consequences of model collapse

The risks extend beyond simple repetition. Researchers identify several types of error that become worse with synthetic data: architectural errors, which come from flaws in the model’s structure; training errors, which stem from biased or unbalanced data; and statistical errors, which emerge when a model mistakes synthetic content for a true representation of reality. As AIs are fed more synthetic data, they begin to smooth out irregularities, becoming more generic, more stereotyped, and, ironically, less intelligent. This process is difficult to detect. AI-generated text or images often look polished. But under the surface, important signals are missing. Models become overconfident in false information. They repeat bland, predictable patterns. They hallucinate facts that sound plausible but aren’t true. The user experience starts to degrade, even if the AI is faster or cheaper to run. There are also ethical dangers. If models forget how to represent rare languages, marginalized communities, or underrepresented viewpoints, they reinforce dominant cultural biases. The loss of diversity in training data doesn’t just make models less accurate; it makes them less just.

Reflections on the future of AI

Why would AI companies let this happen? In part, it’s a practical problem. High-quality human data is increasingly expensive and hard to obtain, and scraping the internet raises legal and ethical issues. By contrast, synthetic data is cheap, abundant, and comes with no copyright strings attached. It’s tempting to reuse it. But this convenience comes at a cost. Just as humans need real experiences and diverse information to grow, AI systems need fresh, human-created data to stay accurate and useful. Companies and researchers will need to invest in keeping human data at the core of what AI learns from, and that depends on people continuing to produce high-quality news articles, books, essays, real conversations, and expert knowledge. Otherwise, we may be building a future where artificial intelligence only reflects itself, like an echo chamber growing dumber by the day.

Weekly Key Words

►model collapse

Topic

#The Generative Area: A Mind for Imagination

Related reading

https://www.euronews.com/next/2024/07/31/new-study-warns-of-model-collapse-as-ai-tools-train-on-ai-generated-content

Weekly FUN Quiz

By now you should know what AI model collapse is! Join this issue’s Weekly FUN Quiz👇 and tell your teacher your answer!

Quiz

Which of the following scenarios best illustrates the concept of “model collapse”?

A. A dog ate too many cookies and became allergic to them

B. A snake was so hungry that it started to eat its own tail

C. A balloon burst due to too much pressure

D. A student studied so hard that his cognitive function was damaged

E. A computer blew up due to high CPU temperature

To WSC Scholars:

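The correct answer to this issue’s Weekly Quiz will be revealed in the column’s next post! Scholars are welcome to follow our service account and visit the “WSC Weekly” column, which will keep you company and share more fun WSC academic knowledge!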